by Niko Mangahas

In the world of software development there’s one absolute certainty: change happens fast. That has always been true, but it seems even more so today. Call it technological evolution, a side effect of Moore’s Law, or whatever label you wish to slap on it. Change in this industry is a snowball rolling down a snowy hill, growing in size and momentum with every passing moment, with the bottom nowhere in sight.

The challenge with change, of course, is adapting without getting steamrolled. The business side that drives enterprise technology teams has certainly adapted to the speed of innovation, setting demanding expectations for faster time to market alongside superior product quality. In response, most organizations struggle to determine which development practices, concepts, or combinations thereof would best meet that need. QA and Software Testing groups in particular have rarely been seen as a source of innovation and have traditionally been expected simply to adapt to change. That can and should change. QA and Software Testing leaders can have a seat at the table and influence IT strategy rather than remain an afterthought.

More companies are embracing Agile development principles, and DevOps and Continuous Integration and Deployment (CI/CD) frameworks are at the heart of IT roadmaps. But these breakthroughs are neither completely original nor unprecedented. Only a few years ago, boardrooms were abuzz about leveraging Cloud and Virtualization, Software-as-a-Service, and Test-Driven Development. Further back, it was the rise of Service-Oriented Architecture and Extreme Programming. In the next few years, it may be code crowdsourcing, development mobs, or even the use of AI. There are myriad possible scenarios, not to mention concepts for which we as an industry have not yet coined a fancy marketing name. In fact, Forbes.com published an article less than a month ago claiming that “Strategic Planning Is Dead” [1], and with good reason.

But multi-year strategic planning is not irrelevant yet; it may just need a new approach. How can QA leaders strategize long-term if their organizations are in flux virtually all the time? Instead of trying to map fixed outcomes over several years, along with tactical, procedural tasks to cross off the list, it may be more beneficial to adhere to a philosophy that does not change, even if its implementation might (and will). That is where the three QA Philosophies discussed here come from.

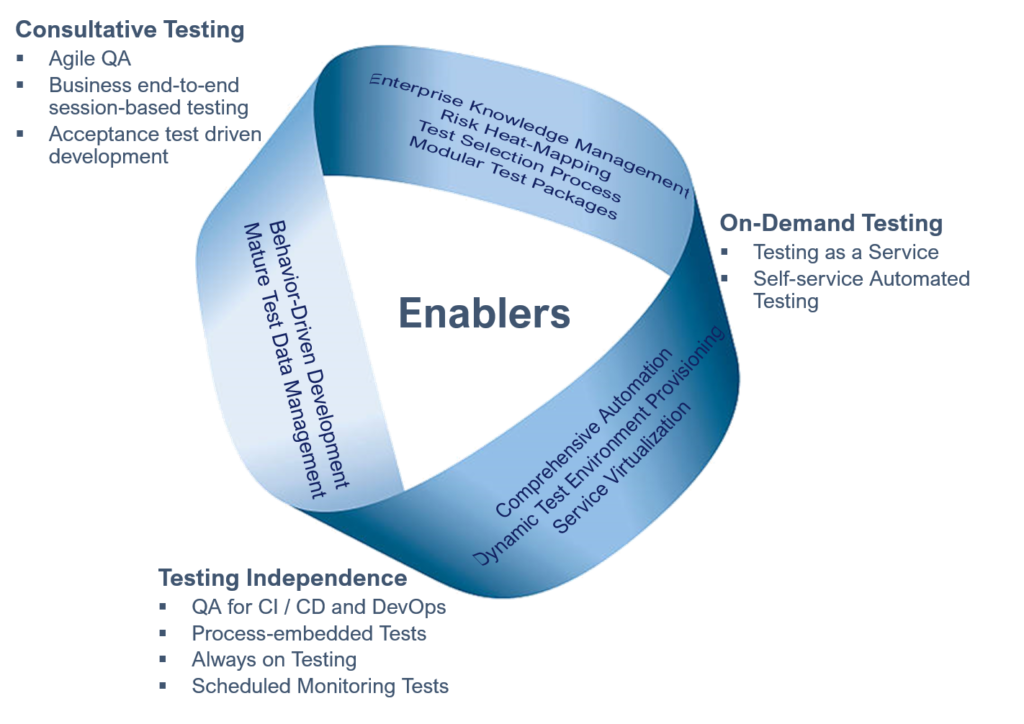

While it does not point to an exact outcome, this concept still steers the strategic planning of software testing innovation toward a philosophy, and in the process defines different ‘expressions’, or implementations, depending on your organization’s current culture, strategy, process maturity, and even technological advances that surface in the meantime. The implementations provided below are only samples, and we should not be constrained by them.

The enablers in the middle are the elements that either act as the foundation of a philosophy or serve as connective tissue integrating two philosophies at a time. To pick an example, ‘Consultative Testing’ is the concept of pushing tests ever closer to the realm of actual use. To accomplish that, a mature test data management system, modular test packaging, and enterprise knowledge management are the building blocks, and they also support the other two philosophies. Example implementations of ‘Consultative Testing’ are also provided; which one fits depends on where your organization is headed, or where it currently sits on the spectrum of stability and maturity. In this case, it could be transforming Agile QA teams or enabling Session-Based Testing.

I’ll cover the essence of the QA Philosophies themselves and how they can manifest in different implementations and approaches, and I’ll touch only briefly on some of the possible enablers, as those may change with the emergence of new technologies.

1) Consultative Testing

Consultative Testing is the QA Philosophy in which testers build and share knowledge of user behavior and tendencies, which in turn leads to creative, relevant tests of how the system might break, and to more efficient testing. Essentially, the objectives are:

- To make testing more relevant and insightful by anticipating end-user behavior, covering the most frequently exercised transactions and operations, including places where an end user may make input errors. Test scenarios will test both functionality and usability, thereby also contributing to system design.

- To make testing faster and more efficient by selecting and prioritizing user-critical paths first.

- To make testing more consultative and expertise-driven, creating better reuse opportunities.
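To make the second objective concrete, here is a minimal Python sketch of usage-based prioritization. It assumes each test case is annotated with how often users exercise that path (for example, from production analytics) and a rough business-impact score; the `TestCase` fields, the frequency-times-impact score, and the time-budget cutoff are illustrative assumptions, not a prescribed formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestCase:
    name: str
    daily_uses: int  # assumed: how often users exercise this path (e.g. from analytics)
    impact: int      # assumed: 1 (cosmetic) to 5 (blocks a business transaction)
    minutes: int     # estimated execution time


def prioritize(tests: list[TestCase]) -> list[TestCase]:
    """Order tests so the most-used, highest-impact user paths come first."""
    # Negate the score for descending order; the name breaks ties deterministically.
    return sorted(tests, key=lambda t: (-t.daily_uses * t.impact, t.name))


def select_within_budget(tests: list[TestCase], budget_minutes: int) -> list[TestCase]:
    """Greedily take the highest-priority tests that still fit in the time budget."""
    selected, used = [], 0
    for test in prioritize(tests):
        if used + test.minutes <= budget_minutes:
            selected.append(test)
            used += test.minutes
    return selected


# Hypothetical suite for illustration.
suite = [
    TestCase("update_profile", daily_uses=200, impact=2, minutes=10),
    TestCase("checkout", daily_uses=5000, impact=5, minutes=20),
    TestCase("login", daily_uses=9000, impact=4, minutes=5),
    TestCase("export_report", daily_uses=50, impact=3, minutes=15),
]
print([t.name for t in select_within_budget(suite, budget_minutes=30)])
# prints ['login', 'checkout']
```

Weighting by observed usage is what ties this back to Consultative Testing: the inputs come from testers’ knowledge of how users actually behave, not from the order in which tests happened to be written.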

Most groups are actually applying some or all of these concepts almost organically. Some Agile QA resources may be more “end-user focused” given the time-boxed nature of the sprint. Teams applying BDD (Behavior-Driven Development) similarly document tests from the perspective of the ‘action-taker’, or end user, so they can rapidly design, search, and maintain both manual and automated tests. ATDD (Acceptance Test-Driven Development) likewise capitalizes on user acceptance criteria. Session-Based Testing is a more structured form of Exploratory Testing that does not rely solely on individual expertise; instead, it allows the insight of an end user to be scaled to an entire group, producing end-to-end flows, better coverage, and less redundant effort than basic exploratory testing.

The generation, documentation and sharing of application knowledge and expertise across the enterprise is in itself a significant achievement, one that leads to synergies and blurs the lines between business analysts, QA and UAT. As the industry moves toward more automation, the obvious next step is applying Artificial Intelligence in testing – but this QA Philosophy preserves the importance of humanistic insight into the target application and the qualitative aspects of testing that only a QA professional can tap into. Consultative testing, in my opinion, is irreplaceable and will never be automatable.

2) On-Demand Testing

One of the widely known truths about successfully driving quality into an organization’s culture is that ‘everyone is responsible’ for ensuring it. Cliché as that may sound, it closely parallels the QA Philosophy of On-Demand Testing: the philosophy of broadly widening the usefulness of testing by putting it into the hands of as many people as possible, who can execute it with as little delay as possible.

On paper this may seem sensible and even simple, but the mechanics and foundations needed to create this capability can be intimidating. A true ‘On-Demand Testing’ capability does not just mean that tests are hosted on highly available tools that anyone can access; if it were only that, any of the ‘enterprise lifecycle suites’ out there would have sufficed. In reality, different users have different testing needs, purposes, and scopes. You cannot run the same ten thousand test cases for your quarterly release regression testing and for a minor fix deployed during your weekend maintenance window. Therefore, ‘On-Demand’ here should also provide efficient ways to select, group, and combine your test artifacts into different configurations as needed (“modular testing”, if you will). As a start, that requires breaking tests into standard units of scope and effort.
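One way to picture “modular testing” is a catalog of test modules, each tagged with its scope and a standard effort estimate, from which a run is composed on demand. The Python sketch below is hypothetical: the module names, tags, and minute estimates are invented for illustration, and a real platform would load the catalog from its test repository rather than hard-code it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TestModule:
    name: str
    tags: frozenset[str]  # scope labels, e.g. application area and test level
    est_minutes: int      # standard unit of effort for planning a run


# Hypothetical catalog; a real one would be loaded from the test repository.
CATALOG = [
    TestModule("billing_smoke", frozenset({"billing", "smoke"}), 5),
    TestModule("billing_regression", frozenset({"billing", "regression"}), 120),
    TestModule("login_smoke", frozenset({"auth", "smoke"}), 3),
    TestModule("login_regression", frozenset({"auth", "regression"}), 45),
    TestModule("reports_regression", frozenset({"reports", "regression", "slow"}), 240),
]


def compose_run(catalog, include, exclude=frozenset()):
    """Select modules carrying every tag in `include` and none in `exclude`."""
    return [m for m in catalog if include <= m.tags and not (m.tags & exclude)]


def total_minutes(run):
    """Sum the effort estimates so a run can be sized before it starts."""
    return sum(m.est_minutes for m in run)


# Quarterly release: every regression module.
quarterly = compose_run(CATALOG, frozenset({"regression"}))
# Weekend billing fix: only the billing modules.
weekend_fix = compose_run(CATALOG, frozenset({"billing"}))
print(total_minutes(quarterly), total_minutes(weekend_fix))  # prints 405 125
```

Tags keep the selection logic independent of how the tests are stored, so the same catalog can serve a release manager, a developer checking a hotfix, and a business user running a single flow.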

Another challenge with diverse user types is that testing cannot be considered accessible if some users shy away because of the complexity of how it is done or of the tool used to run it. This is a particular issue for groups that use a variety of automation solutions to solve different testing problems.

This may sound daunting, but it is surmountable. In fact, roughly a year ago, our team started developing a platform that addresses these very problems (for the most part). But that is a topic for another time, as here we only intend to cover the foundations of this QA Philosophy.

3) Testing Independence

A QA Philosophy that is easy to take at face value, Testing Independence is a massive idea that supports the other two philosophies. It originates from the belief that the more independent the testing, the more reliable the results, and that self-testing and self-review yield the worst outcomes. But it is time to throw out that old adage. Independence in that traditional sense does not really work, because it brings numerous dependencies: test data and code availability, bottlenecks and delays from rigid handover processes (because everyone is always ready to point fingers if something fails), and an inability to see the process end to end, which in turn prevents optimizing the overall release flow (making DevOps and CI/CD all but impossible).

So what is ‘Testing Independence’ in today’s context? It is still testing from an independent group, but it should also offer up three counterpoints to the same issues independence brings:

- Testing Independence is building the right infrastructure that eliminates test dependencies as much as possible

- Testing Independence is speeding up process steps and removing bottlenecks in the SDLC

- Testing Independence also contributes towards the optimization and automation of end-to-end release management

Test data and environments arguably present the greatest dependencies on outside resources for testing teams. Test teams may depend on DBAs to create or modify test data, or on developers to set up test environments and deploy all interfacing systems in the right sequence and on schedule. Relying on other groups to manage test data and environments inevitably ties QA timelines to the timelines and availability of other departments, and that reliance should be reduced, if not eliminated.

Test Data Management (TDM) tools are available from the largest software vendors and beyond. It may also be just a matter of reaching out and collaborating with the owners of your Master Data and Reference Data systems; chances are, the DBMS they use already has features, add-ons, or plugins to spin off an initial TDM implementation.
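As a small illustration of reducing the dependency on DBAs, the sketch below lets a test team provision its own disposable database from a versioned fixture. It uses Python’s built-in `sqlite3` purely as a stand-in; the schema and fixture rows are hypothetical, and a real TDM implementation would target your actual DBMS and data.

```python
import sqlite3

# Hypothetical schema and fixture, versioned alongside the tests they serve.
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, status TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
"""

FIXTURE = {
    "customers": [
        {"id": 1, "name": "Active Customer", "status": "active"},
        {"id": 2, "name": "Suspended Customer", "status": "suspended"},
    ],
    "orders": [
        {"id": 100, "customer_id": 1, "total": 49.90},
        {"id": 101, "customer_id": 1, "total": 15.00},
    ],
}


def provision_test_db(fixture=FIXTURE, path=":memory:"):
    """Create a fresh database and load the fixture, without a hand-off to another team."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # reject orders for unknown customers
    conn.executescript(SCHEMA)
    # Parent tables come first in the fixture so foreign keys resolve on insert.
    for table, rows in fixture.items():
        for row in rows:
            columns = ", ".join(row)
            placeholders = ", ".join("?" for _ in row)
            # Table and column names come from the trusted fixture; values are bound safely.
            conn.execute(
                f"INSERT INTO {table} ({columns}) VALUES ({placeholders})",
                tuple(row.values()),
            )
    conn.commit()
    return conn


conn = provision_test_db()
print(conn.execute("SELECT COUNT(*) FROM orders WHERE customer_id = 1").fetchone()[0])  # prints 2
```

Because each call builds a fresh in-memory database, every test run starts from a known state, and changing the data is a pull request against the fixture rather than a ticket to another department.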

On the environment front, the problem may be twofold: the availability of test environments, or the availability of applications within those environments. A potential answer to both is virtualization, applied in different ways. Virtualization and cloud infrastructure can be used together with auto-configuration tools to spin up test environments or set up dynamic provisioning. Service Virtualization, on the other hand, allows uninterrupted testing of one application even when interfacing applications are unavailable, by simulating their service responses. Applied correctly, both allow testing to start at the earliest possible time.
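Service Virtualization tools are far more capable (recording real traffic, simulating latency and faults), but the core idea can be sketched with Python’s standard library: a stub that answers in place of an unavailable interfacing service. The endpoints and payloads below are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned responses for an interfacing service that is not yet available.
CANNED_RESPONSES = {
    "/customers/42": {"id": 42, "name": "Test Customer", "status": "active"},
    "/credit-check/42": {"approved": True, "limit": 5000},
}


class VirtualServiceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = CANNED_RESPONSES.get(self.path)
        status = 200 if body is not None else 404
        payload = json.dumps(body if body is not None else {"error": "not simulated"}).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_virtual_service(port=0):
    """Serve canned responses in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), VirtualServiceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def get_json(url):
    """Stands in for the application under test calling the interfacing service."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.loads(response.read())


server = start_virtual_service()
base_url = f"http://127.0.0.1:{server.server_address[1]}"
print(get_json(f"{base_url}/credit-check/42"))  # prints {'approved': True, 'limit': 5000}
server.shutdown()
server.server_close()
```

Pointing the application’s service URL at the stub instead of the real dependency is what lets testing start before the interfacing system is deployed.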

While the first two dependencies are QA’s own concerns, we also need to address the bigger picture: how does QA contribute to optimizing the release train? Or, if relevant to your organization, how do we get ready for DevOps or CI/CD? The answer is fairly simple: do not treat your tests as gates; instead, embed your testing into the process itself. A good start is to have your automated smoke tests triggered by every release deployment into a new environment, with thresholds for rejecting a deployment that has issues. Combined with automated notifications, this simple step catches deployment and configuration issues and eliminates the few hours (or days) it takes to smoke test and roll back code if needed.
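The deployment-triggered smoke test with a rejection threshold might look like the following minimal Python sketch. The checks, the `min_pass_rate` parameter, and the `notify` callback are hypothetical placeholders; in practice, the checks would exercise the freshly deployed environment and the callback would post to your team’s chat or e-mail.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GateResult:
    passed: int
    failed: list[str]
    accepted: bool


def smoke_gate(
    checks: dict[str, Callable[[], bool]],
    min_pass_rate: float = 1.0,
    notify: Callable[[str], None] = print,
) -> GateResult:
    """Run post-deployment smoke checks and accept or reject the deployment."""
    failed = []
    for name, check in checks.items():
        try:
            ok = bool(check())
        except Exception:  # a check that crashes counts as a failure
            ok = False
        if not ok:
            failed.append(name)
    passed = len(checks) - len(failed)
    # No checks means no evidence, so an empty suite is treated as a rejection.
    pass_rate = passed / len(checks) if checks else 0.0
    accepted = pass_rate >= min_pass_rate
    verdict = "accepted" if accepted else "rejected"
    notify(f"Deployment {verdict}: {passed}/{len(checks)} smoke checks passed; failed: {failed or 'none'}")
    return GateResult(passed, failed, accepted)


# Hypothetical checks; real ones would call the newly deployed environment.
result = smoke_gate(
    {"home_page": lambda: True, "login": lambda: True, "search": lambda: False},
    min_pass_rate=0.6,
)
```

A CI/CD job could call `smoke_gate` right after each deployment and fail the pipeline step (for example, with a non-zero exit code) when `accepted` is false, which is the signal to roll back.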

The best way to not be a victim of change is to drive it yourself

How well is your QA group strategizing for organizational change? Is it trailing behind or catching up? It shouldn’t stay there when it can influence and drive change. The QA Philosophies discussed here are, at the very least, broad concepts that can help your teams plan long-term while remaining flexible enough to change. You will notice that every QA Philosophy stresses the importance of contributing to delivery speed, and in the same spirit, there is no better time than right now to plan and enact them.

Even to a casual observer, the world of tech is evolving at a breakneck pace, which means business drivers, and the organizations that fulfill them, require investment in the right strategy while staying tactically flexible. Change in our industry won’t slow down for you; if anything, it will accelerate. Are you in front of the snowball, waiting to be flattened, or behind it, shaping it?

Works Cited

1. Forbes (2016, Oct 23). "Strategic Planning Is Dead. Here Are Two New Ways To Face The Future." Retrieved from https://www.forbes.com/sites/williamvanderbloemen/2016/10/23/strategic-planning-is-dead-heres-two-new-ways-to-face-the-future/?sh=3362a5dd2f5b