by Hassan Faouaz

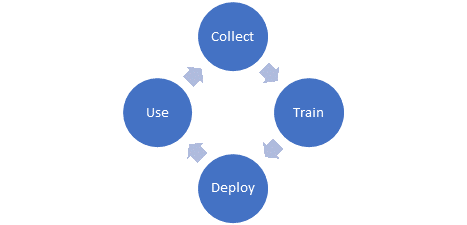

Machine Learning Development Life Cycle (MLDLC)

Machine Learning (ML) and Deep Learning (DL) seem to be the next big thing. Everywhere you look, enterprises are either setting up a platform for ML/DL or already using it to solve complex problems. Many blogs explain how to use it, how to start developing models, or how to deploy them. What I haven’t seen is a description of the end-to-end cycle of solving a problem with machine learning.

My goal for this blog is to explain the end-to-end journey of taking a problem, solving it with machine learning, and deploying the solution. I would like to stay less technical, since there is no shortage of articles that dive deep into image recognition algorithms, the Python code to implement them, and using AWS SageMaker to train and deploy.

Abstract Problem

Have a system in place that provides data, in real time or in batches, to a machine learning model; based on the model’s output, the system reacts and prescribes a new outcome. Simple enough!

Plan of attack:

- Collect lots of data. I mean lots and lots of data…

- Have your data scientists and engineers take this data, prepare it, extract the labels, discover all the features, and start building models. If the intention is to discover a pattern and build a model accordingly, perhaps use a deep learning neural network to create the model.

- Once the model is built, trained, and tested, deploy it to be used by your system.

- Have your system feed data to the deployed model, and based on the result of the model, have your system adjust its course or action.

- Use the system as a data collector for step 1.

In summary, keep collecting data, keep training your model, keep deploying and then, repeat.
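The steps above can be sketched as a loop. This is a minimal sketch, assuming a toy one-number "model" (a threshold between two class averages) and assuming that newly collected data arrives already labelled, which in practice is a separate effort. The names `train`, `predict`, and `run_cycle` are hypothetical and exist only for this illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, bool]  # (reading from the system, true label)


def train(dataset: List[Sample]) -> float:
    """Fit a toy model: the midpoint between the two class averages."""
    pos = [x for x, label in dataset if label]
    neg = [x for x, label in dataset if not label]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def predict(threshold: float, x: float) -> bool:
    """The deployed model: classify a reading against the threshold."""
    return x > threshold


def run_cycle(dataset: List[Sample], new_batches: List[List[Sample]]):
    """Repeat: train on all data so far, deploy, use, collect more data."""
    thresholds, predictions = [], []
    for batch in new_batches:
        threshold = train(dataset)      # build, train, and "deploy" the model
        thresholds.append(threshold)
        for x, _ in batch:              # the system feeds data to the model
            predictions.append(predict(threshold, x))
        dataset = dataset + batch       # the system acts as the data collector
    return thresholds, predictions, dataset
```

Each pass through the loop trains on a larger dataset than the one before, which is the whole point of the cycle.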

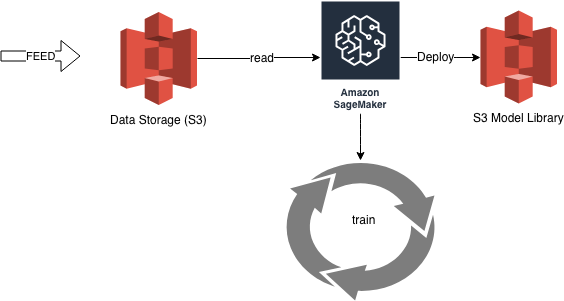

Model design, Training, and Deployment

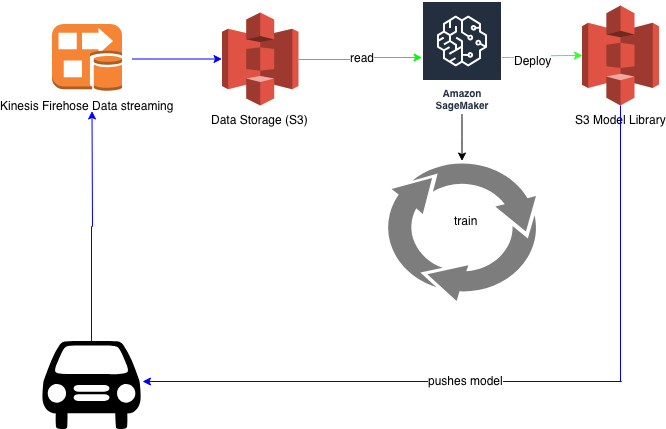

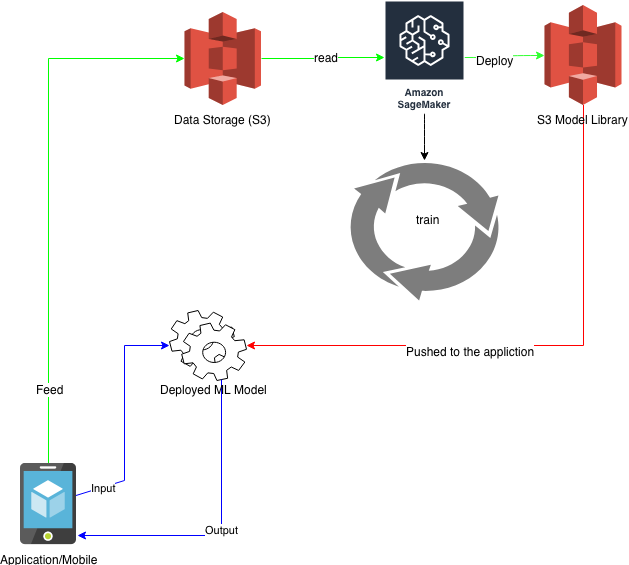

So, below is the life cycle of building a model on AWS. SageMaker is a managed AWS service that lets you prepare, clean, and load data, and then build, train, and deploy ML models. It gives data scientists the tools and the framework to build and train models. The model can be pushed to the system directly, saved in a library, or exposed as a REST API so that it becomes a microservice for other applications.

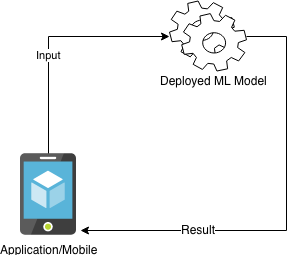

Model Usage After Deployment

Once the model is deployed, the application that initially provided the training data becomes the user of the model. The application feeds data to the model in real time and the model returns its output. Once the application receives the output, it can prescribe an action.
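If the model is hosted as a SageMaker endpoint, the application side of this exchange might look roughly like the sketch below. It relies on the `invoke_endpoint` call of the boto3 `sagemaker-runtime` client; the JSON payload shape (`{"features": [...]}`), the endpoint name, and the helper name `predict_with_endpoint` are assumptions for illustration, since the actual request and response format depends on the deployed model.

```python
import json


def predict_with_endpoint(runtime_client, endpoint_name, features):
    """Send one record to a hosted model and return its decoded output.

    runtime_client is expected to behave like
    boto3.client("sagemaker-runtime"); it is passed in so the function
    can be exercised without AWS credentials.
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        # Assumed payload shape; a real model defines its own format.
        Body=json.dumps({"features": features}),
    )
    # The response body is a stream that must be read and decoded.
    return json.loads(response["Body"].read())


# Hypothetical usage with a real client:
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   result = predict_with_endpoint(runtime, "my-endpoint", [0.1, 0.2])
```

Passing the client in as a parameter keeps the application code independent of how the model is hosted.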

Cycle Process

To keep training the next, enhanced version of the model with more edge cases and higher accuracy, the application also needs to provide its data to the data scientists and engineers so that they can retrain the model. As the application requests output from the model while in use, that same data gets pushed back and collected at the backend. Thus, the cycle is complete.
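One way to picture this feedback path is a thin wrapper that records every request alongside the model’s answer. This is a minimal sketch, assuming the records are written as JSON lines to some writable stream; the class name `LoggingModel` and the record fields are hypothetical.

```python
import json


class LoggingModel:
    """Wrap a prediction function and keep a copy of everything it sees."""

    def __init__(self, predict_fn, sink):
        self._predict = predict_fn
        self._sink = sink  # any writable text stream (file, buffer, ...)

    def predict(self, features):
        output = self._predict(features)
        # One JSON record per request, so the backend can replay it later.
        record = {"features": features, "output": output}
        self._sink.write(json.dumps(record) + "\n")
        return output
```

The application calls `predict` exactly as before; the collected records become candidate training data for the next version once they have been reviewed and labelled.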

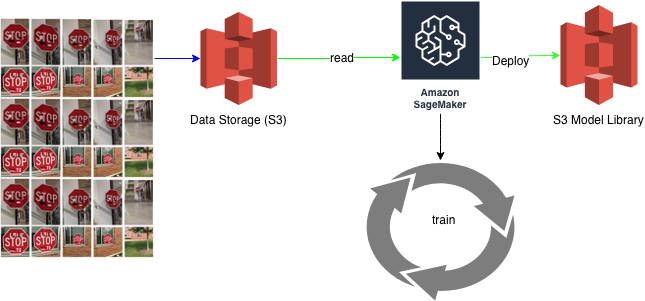

In the cycle diagram, the green line shows the feed that assists in building the model, the red line is the deployment of the model to the application, and the blue line is the actual usage of the model.

In this cycle, there is no place where the model is being trained in real time. The model is created from data that was collected over time, and it is only used in real time at the application level. It is a common misconception that the model is being trained and updated in real time. In this kind of setup that is generally not practical, since the training process needs to run through the data many times before the squared error is small and the accuracy is high.

Now that we have a better understanding of the cycle, I am going to go over a real-life use case of building a model to solve a business problem. Along the way, I will refer back to the previous figures in this blog and relate them to the use case.
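To see why a single pass over the data is usually not enough, here is a minimal sketch, assuming a toy one-weight linear model fitted with plain gradient descent on mean squared error. The data, the learning rate, and the function name `train_linear` are illustrative assumptions; real image models are far larger, but the repeated passes work the same way.

```python
def train_linear(xs, ys, epochs, lr=0.05):
    """Fit y ≈ w * x by gradient descent; return w and the error per pass."""
    w = 0.0
    n = len(xs)
    history = []
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= lr * grad
        mse = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
        history.append(mse)
    return w, history


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]   # generated with w = 2
w_one_pass, _ = train_linear(xs, ys, epochs=1)
w_many, errors = train_linear(xs, ys, epochs=30)
```

After one pass the weight is still far from 2; only after many passes does the squared error shrink toward zero, which is why training happens offline on collected data rather than on each live request.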

Use Case

Autonomous cars have the ability to self-navigate between lanes, adjust cruise speed, and apply the brakes if the car in front stops. What they lack, as yet, is the ability to detect a stop sign and apply the brakes when they encounter one, regardless of what is in front of them. Currently, if the car is in autopilot mode and the road happens to have a stop sign, the car will simply ignore the stop sign and keep going. The reason is that it is not yet designed to detect the stop sign and apply the brakes. (Maybe it is, but for the sake of this blog assume it isn’t!)

Possible Solution

Have the car detect a stop sign and, in response, apply the brakes, alert the driver, and disengage the autopilot. Simple enough!

What do we ultimately need to do?

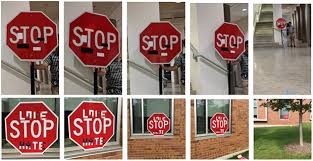

We need to train an ML model to recognize a stop sign, that is, the universal stop sign shape and color. Once the model is able to identify a stop sign regardless of language, size, or shade of red, we can take this model and integrate it with the car. By doing so, the car can detect the sign, gently ease onto the brakes, and stop before the stop sign pole.

Building and Training the Universal Stop Sign Symbol.

From our ML development life cycle steps, we are going to work on steps 1 to 3. In order to develop a machine learning model that can identify a shape, we need to provide the model with many images of the shape and color. Training against them is what produces a model with high accuracy in detecting the stop sign.

I would start by collecting many different images of the stop sign, in different sizes, from different angles, and in different shades of red, and persist them to a storage location. Once I have enough data, it’s time to build the model and train it. This process is iterative: it repeats until the model detects the sign with high accuracy, at which point the model is ready to be deployed. In this scenario, a good fit is a deep learning image detection algorithm from the computer vision field. Again, many blogs out there discuss this particular DL problem in detail.
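The "iterate until accurate enough" step can be sketched as a simple gate on held-out data. This is a minimal sketch, assuming labelled samples as `(input, label)` pairs and a model that is any callable returning a label; the function names, the 20% hold-out fraction, and the 0.95 target are hypothetical choices for illustration.

```python
import random


def split_dataset(samples, holdout_fraction=0.2, seed=0):
    """Shuffle the samples and set a fraction aside for evaluation."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    holdout_size = max(1, round(len(shuffled) * holdout_fraction))
    return shuffled[holdout_size:], shuffled[:holdout_size]


def accuracy(model, labelled):
    """Fraction of labelled samples the model classifies correctly."""
    correct = sum(1 for x, label in labelled if model(x) == label)
    return correct / len(labelled)


def ready_to_deploy(model, holdout, target=0.95):
    """Gate deployment on accuracy measured on data not used for training."""
    return accuracy(model, holdout) >= target
```

Measuring accuracy on images the model has never seen gives a more honest estimate of how it will behave on the road than measuring it on the training images.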

Model Deployment to the Car

Disclaimer. I am using Tesla as an example, along with AWS as the cloud center. Neither is to be taken literally, since neither Tesla nor AWS has ever shared this information or disclosed their cloud solution to me. This is for information purposes only.

Now, once the model is ready, how do you deploy it to the car, and how does the car provide it with images of stop signs? Tesla’s cars have 8 cameras installed, along with an onboard computer that is always connected to the cloud over an active 4G LTE connection. At night, when the car is in your driveway, Tesla’s machine learning lab pushes the model to your car for the car to use.

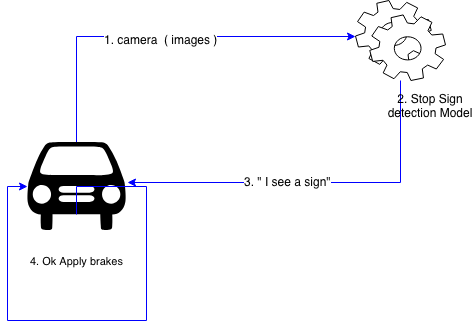

Once the model is pushed, the car is able to use it and react to its output. Since this model requires images to detect stop signs, the cameras on board the car provide the input to the model. The model is now able to tell the car that a stop sign is within range, and the car will gently apply the brakes, alert the driver, and disengage the autopilot. Remember that there is a computer on board, and it is just another application reacting to an output.
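The "application reacting to an output" part can be sketched in a few lines. This is a minimal sketch, assuming the model returns a list of detections with a label and a confidence score; the `Detection` type, the `stop_sign` label, the action names, and the 0.9 confidence cut-off are all hypothetical and do not describe any real vehicle software.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    label: str         # what the model believes it saw
    confidence: float  # between 0.0 and 1.0


def prescribe_actions(detections: List[Detection],
                      min_confidence: float = 0.9) -> List[str]:
    """Turn model output for one camera frame into actions for the car."""
    for detection in detections:
        if detection.label == "stop_sign" and detection.confidence >= min_confidence:
            return ["apply_brakes_gently", "alert_driver", "disengage_autopilot"]
    return []  # nothing relevant detected: carry on


frame_output = [Detection("speed_limit", 0.99), Detection("stop_sign", 0.96)]
actions = prescribe_actions(frame_output)
```

The model only reports what it sees; deciding what to do about it stays in ordinary application code like this.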

Closing the Cycle with the Car

Since the car has an active 4G LTE connection, every image captured by the car is sent to Tesla’s machine learning lab, covering all the possible cases of stop signs. The data is collected and used again to build a smarter model, which is pushed back to the car.

In conclusion, the Machine Learning Development Life Cycle is a cycle that feeds on itself and enhances the model over time. The only way for the cycle to end is if the model no longer serves a purpose.