by Bob Lambert & Robert Santos

Reduce the World’s Infestation of Defective Mobile Apps

It’s an unfortunate fact: defective mobile apps have infested the world. You know this is true from your own experience. How many times has a mobile app annoyed, frustrated, even angered you because of bugs that weren’t caught during the development process?

It’s not just your imagination that lots of mobile apps are defective. An article published in CIO a while back (No Room for Buggy Apps in the Corporate World) even compared mobile app development to Nigerian email scams: “promising anything for the chance to make a buck.”1

Ouch!

And even the largest, most prestigious of companies add to the global infestation by releasing apps that are just loaded with bugs. A recent Computerworld article2 spotlighted defective apps released by Rite Aid and Applebee’s, both multi-billion-dollar companies.

The Worst Defective App

Defective apps that you download and use yourself can certainly be pesky. But the very worst kind of defective app is the one that your organization developed.

Your people brainstormed it, designed it, and developed it. And then your company released it to the public with high hopes for much success — only for the public to decide that it’s considerably less than impressed.

The fact that some of the largest companies on the planet are making the same mistakes by releasing defective apps is cold comfort. Because each defective app your company releases will exact a fearsome toll in diminished public goodwill, and eventually, diminished profits.

In today’s world, in fact, there may be no more effective way to drive consumers away from your brand than with defective apps. A Compuware study3 found that consumers have little tolerance for defective apps and the brands that produce them. Nearly 90% of apps are downloaded, used just once, and then deleted.

The message is clear: if your app doesn’t provide instant gratification, your app will be unceremoniously discarded. And the consumer will look elsewhere — most likely to your competitor — for satisfaction.

What’s the Answer? Mobile Test Automation

Why are so many mobile apps riddled with defects? Is it because development teams have simply abandoned testing?

Of course not.

The real reason lies rooted in the fact that humans are not perfect, and that the old ways of testing are inadequate for the age of mobile development. QA analysts and testers do their best to catch all defects before releasing apps to the public. But defects still persist, even after the finest manual test techniques and processes have been exercised.

The answer to this problem is test automation. The addition of test automation to the app development process will increase the effectiveness and efficiency of mobile testing.

Consider the manual testing process…

Manual testing begins with downloading the app and installing it on mobile devices, followed by step-by-step functional validation of every scenario. Results are compared with the expected behavior, but regression coverage can lag when a change is judged not to affect a given functional area and that area goes unretested. Finally, the test results and summary are recorded. Manual tests are also repeated often during the development phase, both as source code changes are implemented and to confirm that the app performs well on a range of different devices.

Test automation can perform all of those tasks. Human QA analysts and testers are still needed, but machines can and should take over all of the repetitive tasks. Machines, after all, do not lose concentration or limit themselves to a particular area of functionality unless directed to do so. They don’t overlook potential problems because of fatigue or disinterest. For repetitive testing, machines are indisputably more reliable and faster.

Many companies still rely completely on manual testing — most likely because they lack the ability to implement and integrate mobile test automation into their mobile app development process. Yet there are also many savvy companies who have found mobile test automation to be a vital component of successful app development projects.

How can you tell these two types of companies — the purely manual testers and the automated testers — apart? Compare the rates of consumer complaints about their mobile apps.

Setting Up Effective Mobile Test Automation

Mobile device usage continues to skyrocket, and mobile app downloads are reaching record-breaking numbers. As the mobile surge continues, many languages and tools have emerged to enable “Mobile App developers” to create more cool apps. These new tools can also help “Mobile Test developers” improve app quality. For now, let’s focus on the latter.

Some tools are dedicated only to iOS development, and some just to Android. Some tools are suitable for both: Appium, Calabash, and MonkeyTalk, to name a few. In designing and building an effective test automation process, we should first research which tool best suits our needs and fits into our overall testing framework.

For example, we chose Appium for a recent project. Why Appium?

It’s an open source project for cross-platform test automation. The code is hosted on GitHub and can be downloaded for free. And there’s a huge, energetic community that contributes enhancements and bug fixes, and that’s also very responsive to inquiries.

Appium supports most popular languages, such as Ruby, Java, C#, PHP, and Python, and test frameworks such as RSpec, Cucumber, and Minitest. Unlike tools that tie you to a single language, Appium lets you select the programming language and test framework. The ones you select will depend on your level of expertise and preference, as Appium client libraries provide bindings for different languages.

Also, Appium supports iOS and Android test automation, and tests can be executed on emulators and real devices in parallel. Finally, Appium supports not just native and hybrid apps, but also web apps.
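To give a rough idea of how one test suite can target both platforms, the sketch below builds the session capabilities that an Appium server expects. The capability names (`platformName`, `appium:automationName`, `appium:deviceName`, `appium:app`) follow Appium's documented conventions. The helper `build_capabilities`, the device names, and the app paths are placeholders we made up for illustration.

```python
from typing import Dict

# Automation backends that Appium documents for each platform.
AUTOMATION_BY_PLATFORM = {
    "Android": "UiAutomator2",
    "iOS": "XCUITest",
}


def build_capabilities(platform: str, device_name: str, app_path: str) -> Dict[str, str]:
    """Return a capabilities dictionary for one device (hypothetical helper)."""
    if platform not in AUTOMATION_BY_PLATFORM:
        raise ValueError(f"Unsupported platform: {platform!r}")
    return {
        "platformName": platform,
        "appium:automationName": AUTOMATION_BY_PLATFORM[platform],
        "appium:deviceName": device_name,
        "appium:app": app_path,
    }


# Placeholder device names and app paths, for illustration only.
android_caps = build_capabilities("Android", "Pixel_Emulator", "/path/to/app.apk")
ios_caps = build_capabilities("iOS", "iPhone Simulator", "/path/to/app.app")
```

In a real suite these dictionaries would be handed to the Appium client library when opening a session. That step is left out here because it needs a running Appium server.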

Mobile Test Automation Architecture

The accompanying graphic portrays a proposed high-level architecture for mobile automation.

At the top, you will see iOS and Android connected to Appium. They are connected to Libraries encapsulated per individual feature. Features under Libraries are kept granular so that they are common and can be reused by other mobile apps as well.

At the bottom layer are Scripts, Elements and Domain-Specific Libraries (DSLs), which emphasize the particular mobile app functionality.

On the right panel, there is continuous integration, deployment, and delivery, or what we call automation release. Then there’s scheduling of test execution, parallel testing to distribute the load and execute the tests on different devices, and finally automated reporting of test summary results.

Journey to an Effective and Efficient Test Automation Framework

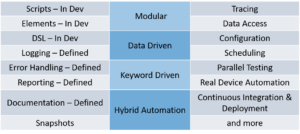

The table below is a listing of some of the primary building blocks we incorporate in building out our customized mobile test automation framework.

You will notice that scripts and elements are in development. This is because they are the most important ingredients of test automation. However, on their own they amount to a linear approach, similar to record-and-playback or standalone automation. This type of test development is brittle, so as we progress, we will add abstraction by decoupling the code and moving reusable methods to DSLs. You will also see some features marked as ‘must definitions’, such as DSL, Logging, Error Handling, Reporting, and Documentation. (‘Defined’ simply means that the features are ready to be injected into the framework.)

As linear automation is defined, the automation team will isolate code which will become a part of the DSL.
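The move from linear scripts to a DSL might look something like the sketch below. Everything here is hypothetical: `FakeDriver` stands in for a real mobile driver so the example can run without a device, and the element IDs and `LoginDsl` class are invented for illustration.

```python
class FakeDriver:
    """Stand-in for a real mobile driver; it only records the actions it receives."""

    def __init__(self):
        self.actions = []

    def type_text(self, element_id, text):
        self.actions.append(("type", element_id, text))

    def tap(self, element_id):
        self.actions.append(("tap", element_id))


def linear_login_test(driver):
    """Linear style: every step and element ID is spelled out in the script."""
    driver.type_text("username_field", "demo_user")
    driver.type_text("password_field", "demo_pass")
    driver.tap("login_button")


class LoginDsl:
    """DSL style: element IDs and steps live in one reusable place."""

    USERNAME = "username_field"
    PASSWORD = "password_field"
    SUBMIT = "login_button"

    def __init__(self, driver):
        self.driver = driver

    def log_in(self, username, password):
        self.driver.type_text(self.USERNAME, username)
        self.driver.type_text(self.PASSWORD, password)
        self.driver.tap(self.SUBMIT)


def dsl_login_test(driver):
    """The test now reads as intent; element details are hidden in the DSL."""
    LoginDsl(driver).log_in("demo_user", "demo_pass")
```

Both tests drive the app the same way, but if an element ID changes, only `LoginDsl` needs to be updated rather than every script that logs in.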

Logging features are defined to generate log files for every test run. This provides the ability to track how a particular test performed by just opening the log and checking the steps that were executed. These logs are also highly useful for failure investigation, with the information in the log identifying precisely where the test failed.
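A minimal sketch of per-run logging, using Python's standard `logging` module, could look like this. The function name `create_run_logger`, the logger naming scheme, and the sample steps are our own assumptions, and the log is written to a temporary directory just to keep the example self-contained.

```python
import logging
import tempfile
from pathlib import Path


def create_run_logger(test_name: str, log_dir: Path) -> logging.Logger:
    """Create a logger that writes one fresh log file per test run."""
    logger = logging.getLogger(f"automation.{test_name}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep test steps out of the root logger
    handler = logging.FileHandler(log_dir / f"{test_name}.log", mode="w", encoding="utf-8")
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.handlers = [handler]  # replace any handler left over from an earlier run
    return logger


# Example run: each executed step is logged, including the failing one.
log_dir = Path(tempfile.mkdtemp())
logger = create_run_logger("login_test", log_dir)
logger.info("Step 1: launch app")
logger.info("Step 2: enter credentials")
logger.error("Step 3: login button not found")

log_text = (log_dir / "login_test.log").read_text(encoding="utf-8")
```

Opening the log then shows the executed steps in order, with the `ERROR` line marking where the test stopped.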

During error handling, messaging should be very descriptive and user-friendly, never vague or cryptic, so that the location of the defect can be pinpointed without ambiguity.
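One way to keep error messages descriptive is a custom exception that carries the step, screen, and element involved. The sketch below is only an illustration: `ElementNotFoundError`, `find_element`, and the sample screen contents are hypothetical names, not part of any library.

```python
class ElementNotFoundError(Exception):
    """Raised when an expected element is missing from a screen."""

    def __init__(self, step, screen, element_id):
        self.step = step
        self.screen = screen
        self.element_id = element_id
        super().__init__(
            f"Step {step}: element '{element_id}' was not found on the '{screen}' screen."
        )


def find_element(screen_elements, step, screen, element_id):
    """Look up an element, failing with a message that pinpoints the problem."""
    if element_id not in screen_elements:
        raise ElementNotFoundError(step, screen, element_id)
    return screen_elements[element_id]


# Example: the checkout screen is missing its 'pay_button'.
checkout_screen = {"cart_total": "$12.00"}
try:
    find_element(checkout_screen, 4, "checkout", "pay_button")
    error_message = ""
except ElementNotFoundError as exc:
    error_message = str(exc)
```

Compared with a bare "element not found", this message tells the reader which step, which screen, and which element to look at.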

The job of the Reporting feature is to generate a report containing a test summary of automation test results that were executed in the test bundle. The job of the Documentation feature is to automatically generate the documentation of all the methods in the code, making it easy for others to understand what the methods are doing.
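As a simple illustration of the Reporting idea, the sketch below turns a bundle of test results into a short text summary. The function `summarize`, the result format, and the test names are assumptions made for this example. A real framework would likely produce richer output, such as HTML or email.

```python
from collections import Counter


def summarize(results):
    """Build a plain-text summary from (test_name, status) pairs."""
    counts = Counter(status for _, status in results)
    lines = [
        f"Total: {len(results)}",
        f"Passed: {counts['passed']}",
        f"Failed: {counts['failed']}",
    ]
    failed = [name for name, status in results if status == "failed"]
    if failed:
        lines.append("Failed tests: " + ", ".join(failed))
    return "\n".join(lines)


# Hypothetical results from one test bundle.
bundle_results = [
    ("login_test", "passed"),
    ("search_test", "passed"),
    ("checkout_test", "failed"),
]
report = summarize(bundle_results)
```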

The Snapshots feature captures screenshots — especially useful if we encounter failed tests. The information provided by Snapshots aids the testing team in identifying the reason(s) for a test failure.
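A Snapshots feature can be sketched as a decorator that saves a screenshot whenever a test raises. We assume here that the driver exposes a `save_screenshot(filename)` method, as Selenium-style Python drivers do. `ScreenshotRecorder` is a hypothetical stand-in so the example runs without a device, and the file naming is our own choice.

```python
import functools


def snapshot_on_failure(test_func):
    """Save a screenshot named after the test if it fails, then re-raise."""

    @functools.wraps(test_func)
    def wrapper(driver, *args, **kwargs):
        try:
            return test_func(driver, *args, **kwargs)
        except Exception:
            driver.save_screenshot(f"{test_func.__name__}_failure.png")
            raise

    return wrapper


class ScreenshotRecorder:
    """Stand-in driver that records screenshot requests instead of taking them."""

    def __init__(self):
        self.saved = []

    def save_screenshot(self, filename):
        self.saved.append(filename)
        return True


@snapshot_on_failure
def checkout_test(driver):
    # Simulated failure, for illustration only.
    raise AssertionError("Order total did not match")


@snapshot_on_failure
def search_test(driver):
    return "ok"
```

Because the exception is re-raised, the test still fails as usual. The screenshot is simply captured on the way out.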

The Tracing feature will provide benefits to our test developers during debugging activities. And the Data Access feature creates SQL queries, calling them during script execution for test data creation and manipulation (an example: pulling a particular product from the product database instead of hardcoding test data in the script).
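The product example above could be sketched with Python's built-in `sqlite3` module. The table layout, the sample rows, and the function names are invented for illustration. A real framework would query the project's own product database.

```python
import sqlite3


def create_sample_db():
    """Create an in-memory product database with a few sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL, in_stock INTEGER)"
    )
    conn.executemany(
        "INSERT INTO products (name, price, in_stock) VALUES (?, ?, ?)",
        [("Notebook", 4.50, 1), ("Backpack", 39.99, 0), ("Water Bottle", 12.00, 1)],
    )
    conn.commit()
    return conn


def fetch_in_stock_product(conn, max_price):
    """Return the most expensive in-stock product at or below max_price."""
    row = conn.execute(
        "SELECT name, price FROM products "
        "WHERE in_stock = 1 AND price <= ? ORDER BY price DESC LIMIT 1",
        (max_price,),
    ).fetchone()
    if row is None:
        raise LookupError(f"No in-stock product priced at or below {max_price}")
    return {"name": row[0], "price": row[1]}


# The test script asks for suitable data instead of hardcoding a product.
conn = create_sample_db()
product = fetch_in_stock_product(conn, 20.00)
```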

The Configuration feature provides the basis for pointing the test set to different mobile devices and app versions. This allows the testing team to schedule alternative test sets to run the tests in a bundle at a specified time and subsequently send the test summary results. Together, these features allow parallel testing and distribute the load of the automated testing process.
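A rough sketch of configuration-driven parallel execution, using the standard `concurrent.futures` module, is shown below. The device list, app version, and `run_test_set` function are placeholders. In a real framework, `run_test_set` would open a session on the configured device and run the actual tests.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical configuration: each entry points the test set at one device.
DEVICE_CONFIG = [
    {"platform": "Android", "device": "Pixel_Emulator", "app_version": "2.1.0"},
    {"platform": "Android", "device": "Galaxy_Tablet", "app_version": "2.1.0"},
    {"platform": "iOS", "device": "iPhone Simulator", "app_version": "2.1.0"},
]


def run_test_set(config):
    """Placeholder for running the test set on one configured device."""
    return {
        "device": config["device"],
        "app_version": config["app_version"],
        "status": "passed",
    }


def run_in_parallel(configs, max_workers=3):
    """Run the test set on every configured device at the same time."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in the same order as the input configs.
        return list(pool.map(run_test_set, configs))


results = run_in_parallel(DEVICE_CONFIG)
```

Adding a device or switching app versions then becomes a change to the configuration rather than to the test scripts.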

Other influences play a role in the process: efforts to move to continuous integration, deployment, and delivery. This allows the team to utilize tools such as Visual Studio Team Services, TeamCity, Octopus, or Jenkins. With these in place, every test automation code set that is created and merged is automatically built and deployed to the automation lab, tagged with a new release version, without human intervention.

Roadmap and Approach

The image below shows the mobile automation roadmap.

As the graphic illustrates, this process involves six phases:

Phase 1: Consists mostly of linear scripting and element recognition for automating basic test scenarios, so that the testing team can begin modularizing the scripts by moving reusable methods to their respective DSLs.

Phase 2: Consists of injecting the logging, tracing, reporting, and error-handling features. In this phase, the modularized code will begin to become integrated into the overall testing strategy based on the regression coverage plan.

Phase 3: As we evolve the automation approach from a linear type of scripting, we will make it data-driven and keyword-driven.

Phase 4: Expand the framework with features such as documentation, data access, and configuration creation. This is to make the test scripts more dynamic and easier to understand.

Phase 5: Move the framework for test automation to more of a hybrid model, and begin to improve the scheduling of the tests for parallel testing.

Phase 6: Aligns the framework for continuous integration, deployment, delivery, and improvement.
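To make the data-driven and keyword-driven idea from Phase 3 more concrete, here is a small sketch. Test steps are expressed as a table of keywords, and the same table is run once per data row. The keywords, the `state` dictionary that stands in for the app, and the sample data are all hypothetical.

```python
def open_screen(state, name):
    state["screen"] = name


def enter_text(state, field, value):
    state.setdefault("fields", {})[field] = value


def verify_field(state, field, expected):
    actual = state.get("fields", {}).get(field)
    if actual != expected:
        raise AssertionError(f"Field '{field}': expected '{expected}', got '{actual}'")


# Keyword-driven: steps are names that map to reusable functions.
KEYWORDS = {
    "open_screen": open_screen,
    "enter_text": enter_text,
    "verify_field": verify_field,
}

TEST_TABLE = [
    ("open_screen", "login"),
    ("enter_text", "username", "{username}"),
    ("verify_field", "username", "{username}"),
]

# Data-driven: the same steps run once for each row of test data.
DATA_ROWS = [{"username": "alice"}, {"username": "bob"}]


def run_table(table, data):
    """Execute each keyword step, filling placeholders from the data row."""
    state = {}
    for keyword, *args in table:
        resolved = [arg.format(**data) for arg in args]
        KEYWORDS[keyword](state, *resolved)
    return state


final_states = [run_table(TEST_TABLE, row) for row in DATA_ROWS]
```

New scenarios can then be added by editing the table or the data rows, without writing new script code.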

What Do You Stand to Gain from Mobile Test Automation?

Mobile test automation can provide your organization with a range of very important benefits, including:

- Return on Investment: Mobile test automation saves time and money. Tests are typically repeated often during development cycles to ensure quality. Each time the source code or the operating system is upgraded, the tests should be repeated against the modified software. Doing so manually is time-consuming and costly. But with test automation, we can run tests over and over again, as needed, at no additional cost, and much faster. To illustrate the speed that can be realized, consider the following example: Typically, 1 manual tester can test 50 scenarios in an 8-hour day. With automation, a 1,000-test regression set can be completed in less than a day. Without automation, the same set would take a single tester about 20 working days.

- Continuous Execution: Machines never get tired. Automated testing can run all day and all night, 24/7. And you don’t have to be on hand to supervise; no matter where you are or what you’re doing, your automated testing will be hard at work. You can schedule the time to start the tests, and have the results sent to you after execution.

- Increased Test Coverage: Test automation increases the rigor, depth, and scope of testing, which in turn improves software quality. Automated testing can easily accomplish tasks that are difficult for manual testers, such as validating memory, database, and file contents. Test automation can easily execute thousands of different complex test cases during every test run, providing coverage that is simply impossible with manual testing.

- Accuracy and Reliability: Test automation is far more reliable and accurate when running the boring, repetitive tests which are essential, and which cannot be skipped without putting product quality at risk. Automation also provides consistency by performing the same steps precisely each time they are executed, and by never failing to record detailed results.

- Reduced Costs and Improved Quality: Test automation moves the regression cycle into the development phase, allowing the delivery team to identify defects far earlier in the development process. And that will translate directly into reduced development costs, reduced person-hours, and enhanced product quality.
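The arithmetic behind the Return on Investment example can be worked through in a few lines. The figures of 50 scenarios per tester per day and a 1,000-test regression set come from the example above. The four-tester case is an extra assumption added only for comparison.

```python
import math


def manual_days(total_tests, tests_per_tester_day, testers=1):
    """Working days needed to run a regression set by hand (rounded up)."""
    return math.ceil(total_tests / (tests_per_tester_day * testers))


# One tester at 50 scenarios per day: 1000 / 50 = 20 working days.
days_one_tester = manual_days(1000, 50)

# Hypothetical team of four testers: 1000 / (50 * 4) = 5 working days.
days_four_testers = manual_days(1000, 50, testers=4)
```

Even with four testers working in parallel, the manual run takes a working week, against less than a day for the automated set.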

By implementing mobile test automation, you free your testers from the repetitive manual testing that humans perform poorly, and give them more time to create new automated software tests and deal with complex features.

As a bonus, you’ll be doing your part in helping fight the global infestation of defective mobile apps. And you’ll also be attracting a very nice infestation of happy consumers into your customer database.

Works Cited

1. CIO. Retrieved from https://www.cio.com/

2. Computerworld (2013, Mar 12). Retrieved from https://www.digitaltrends.com/mobile/16-percent-of-mobile-userstry-out-a-buggy-app-more-than-twice/

3. Computerworld (2015, Sept 24). Retrieved from https://www.computerworld.com/article/2986160/mobile-apps-publish-first-test-never.html